As optical fiber networks continue to form the backbone of modern broadband infrastructure, service expectations have never been higher. Customers demand uninterrupted voice calls, smooth video streaming, real-time gaming, and reliable enterprise connectivity. While bandwidth and latency are often the most discussed performance metrics, jitter — the variation in packet or signal timing — plays a critical role in determining real-world network quality.

For Internet Service Providers (ISPs), unmanaged jitter can quietly degrade customer experience, increase support tickets, and impact service-level commitments. Understanding how jitter occurs in optical networks and how to mitigate it is essential for delivering stable, high-performance services.

Understanding Jitter in Optical Networks

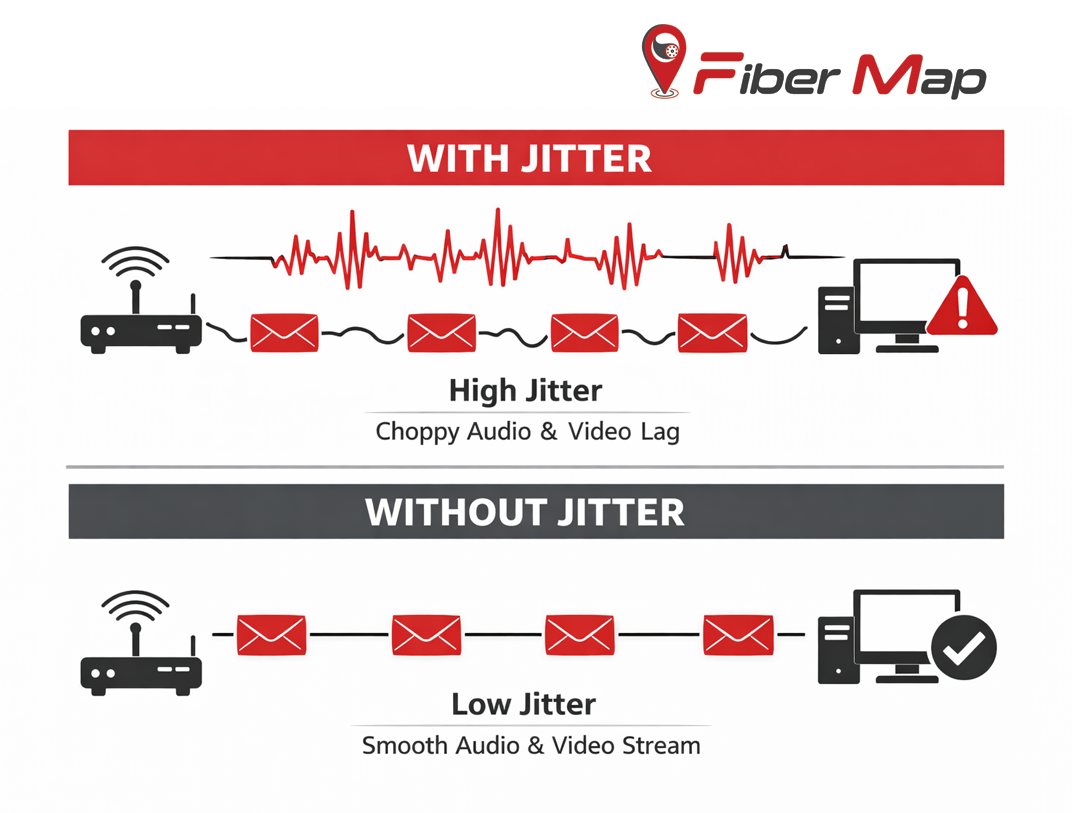

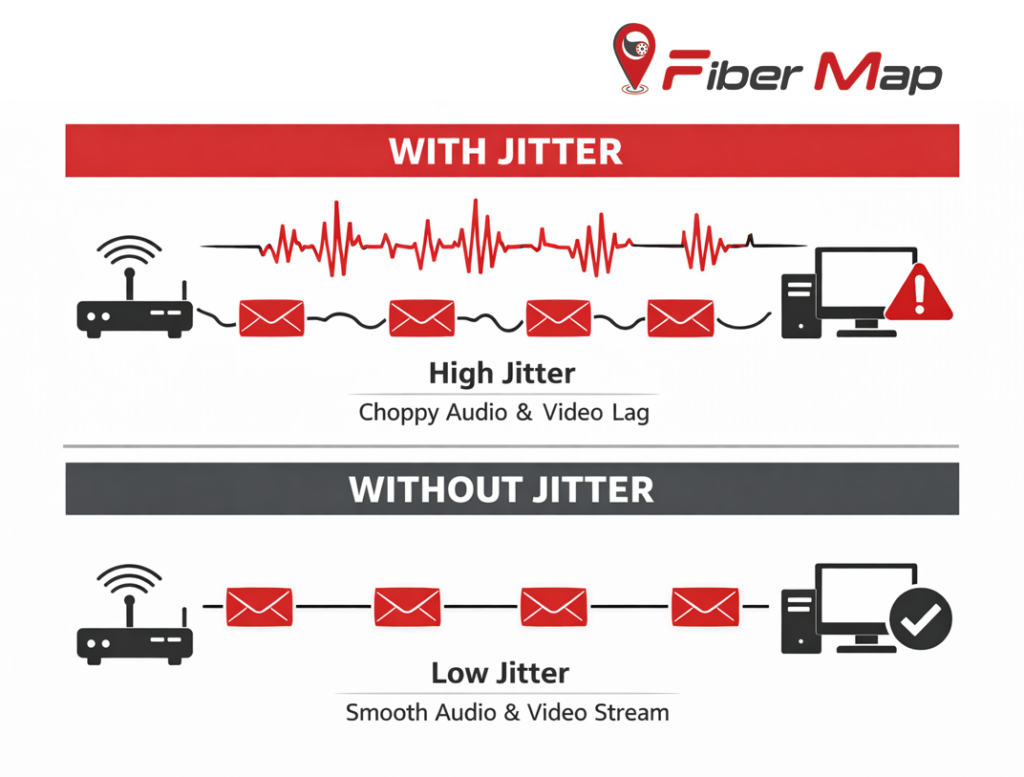

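Jitter refers to inconsistency in timing. At the packet level, it is the variation in delay between packets of the same stream, often called packet delay variation; at the physical layer, it is the deviation of signal transitions from their ideal positions in time. In an ideal network, packets sent at regular intervals would arrive at exactly those intervals. In practice, the intervals fluctuate because of physical signal behavior and network processing delays.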
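As a minimal sketch of how packet-level jitter can be quantified, the Python below computes the one-way delay of each packet, the delay change between consecutive packets, and the peak-to-peak spread. The function names and timestamps are hypothetical, and the sender and receiver clocks are assumed to be synchronized.

```python
"""Quantify packet-level jitter from send and receive timestamps.

Illustrative sketch: timestamps are hypothetical values in milliseconds,
and the sender and receiver clocks are assumed to be synchronized.
"""


def one_way_delays(send_ms: list[float], recv_ms: list[float]) -> list[float]:
    """Per-packet one-way delay (receive time minus send time)."""
    return [r - s for s, r in zip(send_ms, recv_ms)]


def delay_variation(delays: list[float]) -> list[float]:
    """Change in delay between each packet and the one before it."""
    return [b - a for a, b in zip(delays, delays[1:])]


def peak_to_peak(delays: list[float]) -> float:
    """Spread between the slowest and the fastest packet."""
    return max(delays) - min(delays)


if __name__ == "__main__":
    # Packets sent every 20 ms, as a voice codec might do.
    send = [0.0, 20.0, 40.0, 60.0, 80.0]
    # Hypothetical arrivals: the spacing is no longer exactly 20 ms.
    recv = [5.0, 25.5, 47.0, 65.25, 86.0]
    d = one_way_delays(send, recv)
    print("delays:", d)
    print("consecutive change:", delay_variation(d))
    print("peak-to-peak:", peak_to_peak(d))
```

Here every packet was sent on a 20 ms cadence, yet the delays range from 5 ms to 7 ms, a 2 ms peak-to-peak spread.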

Even small timing variations can have outsized effects on real-time applications. Voice and video services rely on consistent packet delivery. When jitter exceeds acceptable limits, users experience choppy audio, frozen video frames, buffering, or dropped connections. For business and mission-critical services, jitter can disrupt synchronization and reduce application reliability.

Key Causes of Jitter in Fiber-Based Infrastructure

Although fiber optics provide high speed and low attenuation, jitter can still be introduced at multiple points across the network.

Signal Dispersion

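As optical signals travel over long distances, light pulses spread because different wavelengths propagate at slightly different speeds in the fiber (chromatic dispersion). The spectral components of each pulse therefore arrive at slightly different times, which broadens the pulse, blurs the timing of signal transitions, and increases timing variation. Physical imperfections in the fiber, such as slight core asymmetry, add polarization mode dispersion (PMD) and can further amplify this effect.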
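To first order, the broadening can be estimated as Δτ = D · L · Δλ, where D is the fiber's dispersion parameter, L the link length, and Δλ the spectral width of the source. The sketch below assumes D ≈ 17 ps/(nm·km), a typical figure for standard single-mode fiber near 1550 nm, together with an illustrative 80 km span and 0.1 nm spectral width. Under those assumptions the spread (about 136 ps) already exceeds the 100 ps bit period of a 10 Gb/s signal.

```python
"""First-order chromatic-dispersion broadening: delta_tau = D * L * delta_lambda."""


def dispersion_broadening_ps(d_ps_per_nm_km: float, length_km: float,
                             spectral_width_nm: float) -> float:
    """Pulse spread in ps for dispersion D, fiber length L, and source width."""
    return d_ps_per_nm_km * length_km * spectral_width_nm


def bit_period_ps(bit_rate_gbps: float) -> float:
    """Duration of one bit in ps (1 / bit rate)."""
    return 1000.0 / bit_rate_gbps


if __name__ == "__main__":
    # Assumed values: ~17 ps/(nm*km) is typical of standard single-mode fiber
    # near 1550 nm; the span length and spectral width are illustrative only.
    spread = dispersion_broadening_ps(17.0, 80.0, 0.1)
    period = bit_period_ps(10.0)
    print(f"broadening: {spread:.1f} ps, bit period at 10 Gb/s: {period:.1f} ps")
```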

Transceiver and Clock Instability

Optical transceivers rely on precise timing mechanisms to transmit and recover data. Any instability in laser sources, oscillators, or clock recovery circuits can introduce timing noise that accumulates across network hops.
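How quickly clock noise accumulates depends on whether each hop's contributions are correlated. A common rule of thumb, assumed here, is that uncorrelated random jitter adds as a root sum of squares (RSS), while a straight sum gives a pessimistic bound for fully correlated contributions. The per-hop figure in the example is hypothetical.

```python
"""Two ways per-hop timing jitter can add up along a path."""
import math


def rss_total(per_hop_rms_ps: list[float]) -> float:
    """Root sum of squares: appropriate if each hop's jitter is random and uncorrelated."""
    return math.sqrt(sum(j * j for j in per_hop_rms_ps))


def linear_total(per_hop_ps: list[float]) -> float:
    """Straight sum: a pessimistic bound if the contributions are fully correlated."""
    return sum(per_hop_ps)


if __name__ == "__main__":
    # Hypothetical path: five hops, each adding 2 ps RMS of random jitter.
    hops = [2.0] * 5
    print(f"uncorrelated (RSS): {rss_total(hops):.2f} ps")
    print(f"fully correlated:   {linear_total(hops):.2f} ps")
```

With five hops of 2 ps RMS each, the RSS total is about 4.47 ps and the linear bound is 10 ps. Either way, jitter grows with hop count unless something along the path removes it.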

Packet Queuing and Congestion

In packet-switched optical networks, traffic passes through routers, switches, and buffers. During peak usage or traffic bursts, packets may experience inconsistent queue delays. This variation directly manifests as jitter, particularly in shared access and aggregation networks.
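The Lindley recursion, a standard result for a single first-in, first-out queue, shows how bursts turn into delay variation. Each packet's wait equals the previous packet's wait plus that packet's transmission time, minus the gap since the previous arrival, floored at zero. The traffic pattern below is made up for illustration.

```python
"""Queueing delay in a single FIFO buffer via the Lindley recursion.

W[n] = max(0, W[n-1] + S[n-1] - A[n]), where W is a packet's wait in the
queue, S its transmission (service) time, and A the gap since the previous
arrival. Times are in microseconds; the traffic pattern is hypothetical.
"""


def fifo_waits(interarrival_us: list[float], service_us: list[float]) -> list[float]:
    """Waiting time of each packet; interarrival_us[0] is ignored."""
    waits = [0.0]  # the first packet finds an empty queue
    for n in range(1, len(service_us)):
        w = waits[-1] + service_us[n - 1] - interarrival_us[n]
        waits.append(max(0.0, w))
    return waits


if __name__ == "__main__":
    # Steady packets every 10 us, then three arriving almost back to back.
    gaps = [0.0, 10.0, 10.0, 1.0, 1.0, 1.0, 10.0, 10.0]
    service = [8.0] * len(gaps)  # each packet takes 8 us to transmit
    waits = fifo_waits(gaps, service)
    print("waits:", waits)
    print("delay spread:", max(waits) - min(waits))
```

The steady packets see no queuing, but the burst pushes waits up to 21 µs, and the queue drains only gradually afterward, so packets of the same stream experience noticeably different delays.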

Network Processing and Routing Behavior

Dynamic routing decisions, traffic shaping policies, and load balancing mechanisms can cause packets within the same data stream to experience different processing paths or delays, further increasing jitter.
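The sketch below contrasts per-packet load balancing, which alternates a stream's packets across paths, with per-flow hashing, which keeps every packet of a flow on one path. The two path delays are hypothetical, and SHA-256 stands in for whatever hash function a real router implements in hardware.

```python
"""Per-packet versus per-flow load balancing over two paths.

Hypothetical setup: path A has 2.0 ms delay and path B has 3.5 ms.
"""
import hashlib

PATH_DELAY_MS = {"A": 2.0, "B": 3.5}
PATHS = sorted(PATH_DELAY_MS)


def per_packet_path(seq: int) -> str:
    """Round-robin each packet of the stream across the paths."""
    return PATHS[seq % len(PATHS)]


def per_flow_path(flow_id: tuple) -> str:
    """Pick a path from a stable hash of the flow's 5-tuple."""
    digest = hashlib.sha256(repr(flow_id).encode()).digest()
    return PATHS[digest[0] % len(PATHS)]


def delays(path_choices: list[str]) -> list[float]:
    """Map each packet's chosen path to that path's delay."""
    return [PATH_DELAY_MS[p] for p in path_choices]


if __name__ == "__main__":
    flow = ("10.0.0.1", "10.0.0.2", 17, 5004, 5004)  # src, dst, proto, sport, dport
    sprayed = delays([per_packet_path(i) for i in range(6)])
    pinned = delays([per_flow_path(flow) for _ in range(6)])
    print("per-packet:", sprayed)  # alternates between 2.0 and 3.5 ms
    print("per-flow:  ", pinned)   # constant, whichever path the hash picks
```

Spraying one stream across unequal paths creates a 1.5 ms delay swing between consecutive packets, while hashing the flow keeps its delay constant.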

Effective Strategies to Minimize Jitter

Reducing jitter requires a holistic approach, addressing both the physical transport layer and higher-level traffic behavior.

Strengthening the Physical Layer

A stable physical foundation significantly reduces jitter before traffic even reaches higher network layers.

Using high-quality fiber, connectors, and splicing techniques minimizes signal distortion and reflection. Modern fiber designs with tighter tolerances help preserve pulse integrity over long distances.

For long-haul and high-capacity links, dispersion compensation techniques can be applied to counteract pulse spreading. Advanced modulation formats and coherent transmission improve signal resilience, and coherent receivers can compensate dispersion digitally, allowing more accurate signal reconstruction at the receiving end.
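One classic form of dispersion compensation adds a length of dispersion-compensating fiber (DCF) with dispersion of the opposite sign, sized so that D_smf · L_smf + D_dcf · L_dcf = 0. The values below are assumptions: about 17 ps/(nm·km) for standard fiber and -100 ps/(nm·km) for the DCF. Coherent systems usually perform this compensation in digital signal processing instead, which this sketch does not model.

```python
"""Size a dispersion-compensating fiber (DCF) so accumulated dispersion cancels.

Condition: D_smf * L_smf + D_dcf * L_dcf = 0. Assumed values: ~17 ps/(nm*km)
for standard fiber near 1550 nm and -100 ps/(nm*km) for the DCF.
"""


def residual_dispersion(d_smf: float, l_smf_km: float,
                        d_dcf: float, l_dcf_km: float) -> float:
    """Net accumulated dispersion in ps/nm after both fiber sections."""
    return d_smf * l_smf_km + d_dcf * l_dcf_km


def dcf_length_km(d_smf: float, l_smf_km: float, d_dcf: float) -> float:
    """DCF length that drives the residual dispersion to zero."""
    if d_dcf * d_smf >= 0:
        raise ValueError("DCF must have dispersion of the opposite sign")
    return -d_smf * l_smf_km / d_dcf


if __name__ == "__main__":
    length = dcf_length_km(17.0, 80.0, -100.0)
    print(f"DCF length: {length:.1f} km")
    print(f"residual: {residual_dispersion(17.0, 80.0, -100.0, length):.2f} ps/nm")
```

Under these assumptions, an 80 km span accumulates 1360 ps/nm and needs about 13.6 km of DCF to cancel it.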

Equally important is precise clock recovery. High-performance transceivers with robust clock and data recovery (CDR) circuits retime incoming signals and attenuate timing variations before retransmission, preventing jitter from compounding across the network.
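CDR circuits and jitter-attenuating phase-locked loops behave like low-pass filters on phase: they follow slow timing wander but average out fast jitter. The sketch below uses a deliberately simplified first-order loop with an assumed gain k. Practical CDRs are typically higher-order loops, and the Gaussian input jitter here is synthetic.

```python
"""Low-pass filtering of phase jitter, in the spirit of a jitter-attenuating loop.

Simplified first-order model: at each step the recovered clock phase moves a
fraction k (an assumed loop gain) of the way toward the incoming phase.
"""
import random
import statistics


def track_phase(input_phase: list[float], k: float) -> list[float]:
    """Recovered-clock phase after a first-order loop with gain 0 < k <= 1."""
    out, phase = [], 0.0
    for p in input_phase:
        phase += k * (p - phase)  # correct a fraction of the phase error
        out.append(phase)
    return out


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical incoming jitter: zero-mean Gaussian, 5 ps RMS, one sample per bit.
    jitter_in = [rng.gauss(0.0, 5.0) for _ in range(10_000)]
    jitter_out = track_phase(jitter_in, k=0.1)
    print(f"input RMS:  {statistics.pstdev(jitter_in):.2f} ps")
    print(f"output RMS: {statistics.pstdev(jitter_out):.2f} ps")
```

For white input jitter, this loop reduces the RMS value by a factor of roughly sqrt(k / (2 - k)), about 0.23 for k = 0.1. The trade-off is that slower phase changes are tracked only gradually.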

Intelligent Traffic Prioritization

Even with a strong physical layer, jitter can rise sharply if traffic is not managed effectively.

Quality of Service (QoS) policies allow ISPs to prioritize delay-sensitive traffic such as voice, video, and interactive applications. Transmitting these packets ahead of bulk or background traffic keeps their timing more consistent.
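To illustrate the effect, the simulation below compares the delay of periodic voice packets when a burst of bulk packets shares the same non-preemptive output port, first under FIFO scheduling and then under strict priority for voice. The link model, packet sizes, and arrival times are all hypothetical.

```python
"""Voice-packet delay under FIFO versus strict-priority scheduling.

Hypothetical link model: one output port, non-preemptive (a packet already on
the wire finishes). Each packet is (arrival_time, tx_time, is_voice) in
arbitrary time units.
"""

Packet = tuple[float, float, bool]


def voice_delays(packets: list[Packet], strict_priority: bool) -> list[float]:
    """Arrival-to-departure delay of each voice packet, in arrival order."""
    pending = sorted(packets, key=lambda p: p[0])
    queue: list[Packet] = []
    clock, i, delays = 0.0, 0, []
    while i < len(pending) or queue:
        # Admit every packet that has arrived by the current time.
        while i < len(pending) and pending[i][0] <= clock:
            queue.append(pending[i])
            i += 1
        if not queue:
            clock = pending[i][0]  # link idle: skip ahead to the next arrival
            continue
        # Strict priority serves the oldest waiting voice packet, if any.
        waiting_voice = [p for p in queue if p[2]] if strict_priority else []
        pkt = waiting_voice[0] if waiting_voice else queue[0]
        queue.remove(pkt)
        clock += pkt[1]  # transmit the packet to completion
        if pkt[2]:
            delays.append(clock - pkt[0])
    return delays


if __name__ == "__main__":
    voice = [(t, 1.0, True) for t in (0.0, 10.0, 20.0, 30.0, 40.0)]
    burst = [(9.0, 4.0, False)] * 5  # a bulk transfer dumps five large packets
    for mode in (False, True):
        name = "strict priority" if mode else "FIFO"
        print(f"{name:>15}: {voice_delays(voice + burst, mode)}")
```

In this scenario FIFO produces voice delays of 1, 20, 11, 2, and 1 time units, while strict priority limits them to 1, 4, 3, 2, and 1. The remaining delay comes from waiting for a bulk packet that is already being transmitted.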

Traffic shaping and rate control further smooth packet flows by preventing sudden bursts that overload buffers. This controlled delivery reduces queuing delays and stabilizes packet intervals.
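A token bucket is a common way to implement shaping. In the sketch below, tokens accumulate at the configured rate up to a burst limit, and a packet is released only once enough tokens are available, so a burst is spread out in time rather than dropped. The rate, bucket size, and packet sizes are illustrative assumptions.

```python
"""A token-bucket shaper: delay packets so the output conforms to a rate.

Tokens (in bits) accrue at rate_bps up to burst_bits; a packet departs once
enough tokens exist. All values used below are illustrative assumptions.
"""


def shape(arrivals_s: list[float], sizes_bits: list[int],
          rate_bps: float, burst_bits: float) -> list[float]:
    """Release time of each packet (FIFO order) from a token-bucket shaper."""
    tokens, last, departures = burst_bits, 0.0, []
    for t, size in zip(arrivals_s, sizes_bits):
        if size > burst_bits:
            raise ValueError("a packet larger than the bucket can never conform")
        start = max(t, departures[-1] if departures else 0.0)  # keep FIFO order
        tokens = min(burst_bits, tokens + (start - last) * rate_bps)  # refill
        wait = max(0.0, (size - tokens) / rate_bps)  # time to earn missing tokens
        depart = start + wait
        tokens += wait * rate_bps - size  # tokens earned while waiting, minus cost
        last = depart
        departures.append(depart)
    return departures


if __name__ == "__main__":
    # Five 1500-byte (12,000-bit) packets arrive at once; shape to 1 Mb/s.
    out = shape([0.0] * 5, [12_000] * 5, rate_bps=1_000_000, burst_bits=12_000)
    print([round(d * 1000, 1) for d in out])  # release times in ms
```

The five packets leave at 0, 12, 24, 36, and 48 ms: a back-to-back burst becomes an evenly spaced stream that downstream buffers can absorb.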

In some scenarios, jitter buffers at the receiving end can help absorb short-term variations. These buffers temporarily store packets and release them at a steady pace, balancing timing consistency against acceptable latency.
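A fixed playout delay is the simplest form of jitter buffer. The sketch below schedules each packet for its send time plus a chosen delay and counts packets that arrive too late for their slot. The delay values and timestamps are hypothetical.

```python
"""A fixed-delay jitter (playout) buffer.

Each packet is scheduled to play at send_time + playout_delay; packets that
arrive after their slot are counted as late. Times are hypothetical, in ms.
"""


def playout(send_ms: list[float], recv_ms: list[float],
            playout_delay_ms: float) -> tuple[list[float], int]:
    """Return playout times of on-time packets and the number of late packets."""
    played, late = [], 0
    for s, r in zip(send_ms, recv_ms):
        deadline = s + playout_delay_ms
        if r <= deadline:
            played.append(deadline)  # held in the buffer until its slot
        else:
            late += 1  # missed its slot; receivers typically conceal the gap
    return played, late


if __name__ == "__main__":
    send = [0.0, 20.0, 40.0, 60.0, 80.0]
    recv = [5.0, 25.5, 47.0, 65.25, 86.0]  # irregular arrivals
    for delay in (6.0, 10.0):
        times, late = playout(send, recv, delay)
        print(f"buffer {delay} ms -> playout {times}, late packets: {late}")
```

With these arrivals, a 6 ms buffer plays four packets on schedule but loses the third. A 10 ms buffer plays all five on an even 20 ms cadence at the cost of 4 ms of extra latency.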

Continuous Monitoring and Proactive Management

Jitter is not a static metric. Network conditions change constantly, making continuous visibility essential.

Monitoring tools that track packet delay variation and signal timing provide early insight into developing issues. Establishing baseline jitter thresholds for critical links helps teams identify anomalies before they affect customer experience.
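For RTP media streams, RFC 3550 defines an interarrival jitter estimate that many monitoring tools report: the absolute change in transit time between consecutive packets, smoothed with a gain of 1/16. The sketch below implements that estimator and flags samples above a baseline threshold. The 3 ms threshold and the synthetic traffic are assumptions. Because the estimate uses differences in transit time, a fixed offset between the sender and receiver clocks cancels out.

```python
"""Running interarrival jitter in the style of RFC 3550, plus a threshold alert.

J += (|D| - J) / 16, where D is the change in transit time between consecutive
packets. The baseline threshold and sample traffic are assumptions.
"""


def rfc3550_jitter(send_ms: list[float], recv_ms: list[float]) -> list[float]:
    """Jitter estimate (ms) after each packet, starting from zero."""
    estimates, jitter, prev_transit = [], 0.0, None
    for s, r in zip(send_ms, recv_ms):
        transit = r - s
        if prev_transit is not None:
            d = transit - prev_transit
            jitter += (abs(d) - jitter) / 16.0
        prev_transit = transit
        estimates.append(jitter)
    return estimates


def breaches(estimates: list[float], threshold_ms: float) -> list[int]:
    """Indices where the smoothed jitter exceeds the link's baseline threshold."""
    return [i for i, j in enumerate(estimates) if j > threshold_ms]


if __name__ == "__main__":
    # Synthetic stream: 40 steady packets, then transit alternating 5/15 ms.
    transit = [5.0] * 40 + [5.0, 15.0] * 10
    send = [20.0 * i for i in range(len(transit))]
    recv = [s + t for s, t in zip(send, transit)]
    est = rfc3550_jitter(send, recv)
    print(f"final jitter: {est[-1]:.2f} ms")
    print("first breach at packet", breaches(est, threshold_ms=3.0)[0])
```

Here the estimate stays at zero during the steady phase and first crosses the 3 ms threshold at packet index 46. Because of the smoothing, a persistent change triggers the alert within a few packets while isolated outliers are damped.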

Proactive alerts allow operations teams to intervene quickly, whether by adjusting traffic policies, reallocating capacity, or investigating physical link degradation. Regular performance audits and testing ensure that infrastructure remains aligned with service quality expectations as network demand grows.

Building Jitter-Resilient Optical Networks

For ISPs, reducing jitter is not about a single fix — it is about consistent engineering discipline across the network lifecycle. From fiber selection and optical design to traffic policies and monitoring frameworks, each layer plays a role in timing stability.

As networks evolve to support higher speeds, cloud services, and real-time applications, jitter control becomes increasingly critical. ISPs that proactively address jitter are better positioned to deliver superior service quality, reduce customer complaints, and support advanced digital services with confidence.

By treating jitter as a core performance metric rather than an afterthought, optical networks can achieve the stability required for today’s and tomorrow’s connectivity demands.